Abstract

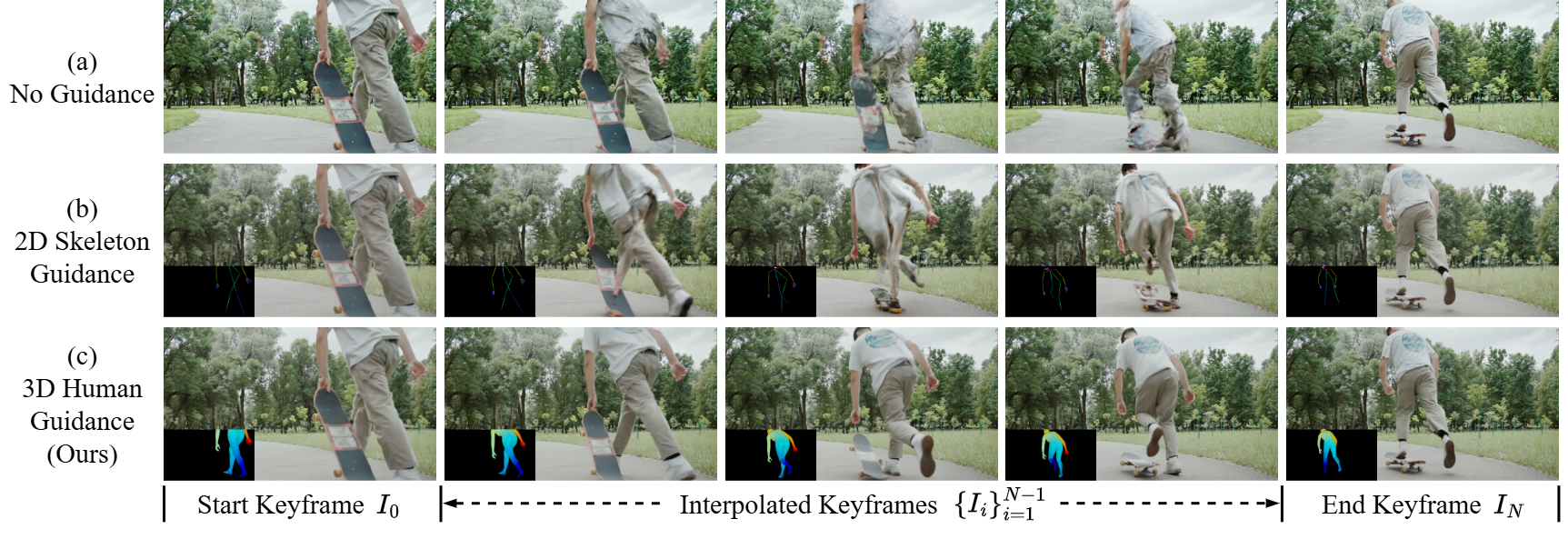

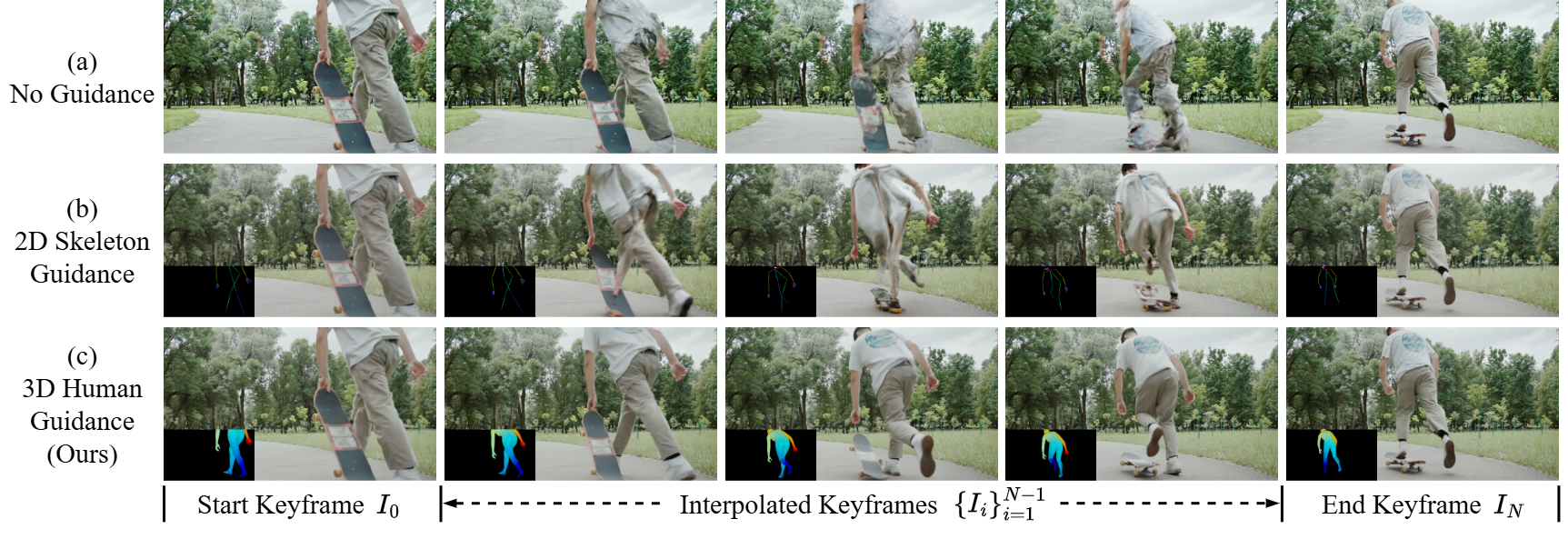

Existing interpolation methods use pre-trained video diffusion priors to generate intermediate frames between sparsely sampled keyframes. In the absence of 3D geometric guidance, these methods struggle to produce plausible results for complex, articulated human motions and offer limited control over the synthesized dynamics.

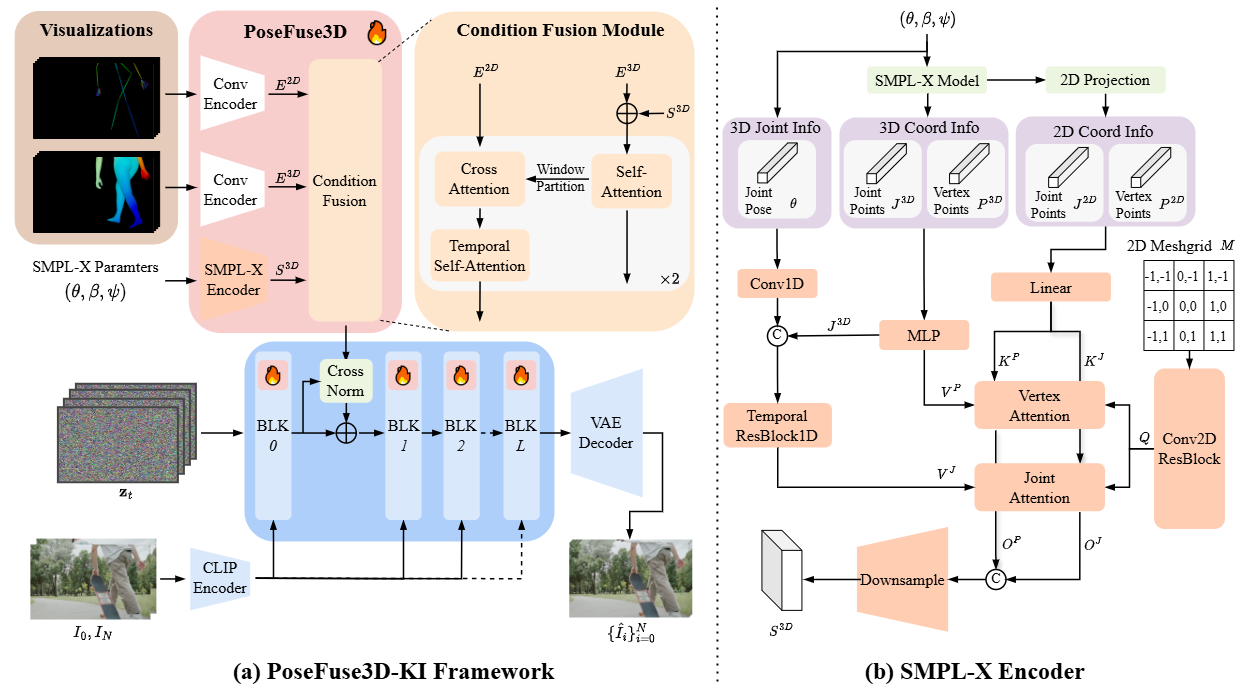

In this paper, we introduce PoseFuse3D Keyframe Interpolator (PoseFuse3D-KI), a novel framework that integrates 3D human guidance signals into the diffusion process for Controllable Human-centric Keyframe Interpolation (CHKI).

To provide rich spatial and structural cues for interpolation, our PoseFuse3D, a 3D-informed control model, features a novel SMPL-X encoder that transforms 3D geometry and shape into the 2D latent conditioning space, alongside a fusion network that integrates these 3D cues with 2D pose embeddings.

For evaluation, we build CHKI-Video, a new dataset annotated with both 2D poses and 3D SMPL-X parameters. We show that PoseFuse3D-KI consistently outperforms state-of-the-art baselines on CHKI-Video, achieving a 9% improvement in PSNR and a 38% reduction in LPIPS.

Comprehensive ablations demonstrate that our PoseFuse3D model improves interpolation fidelity.